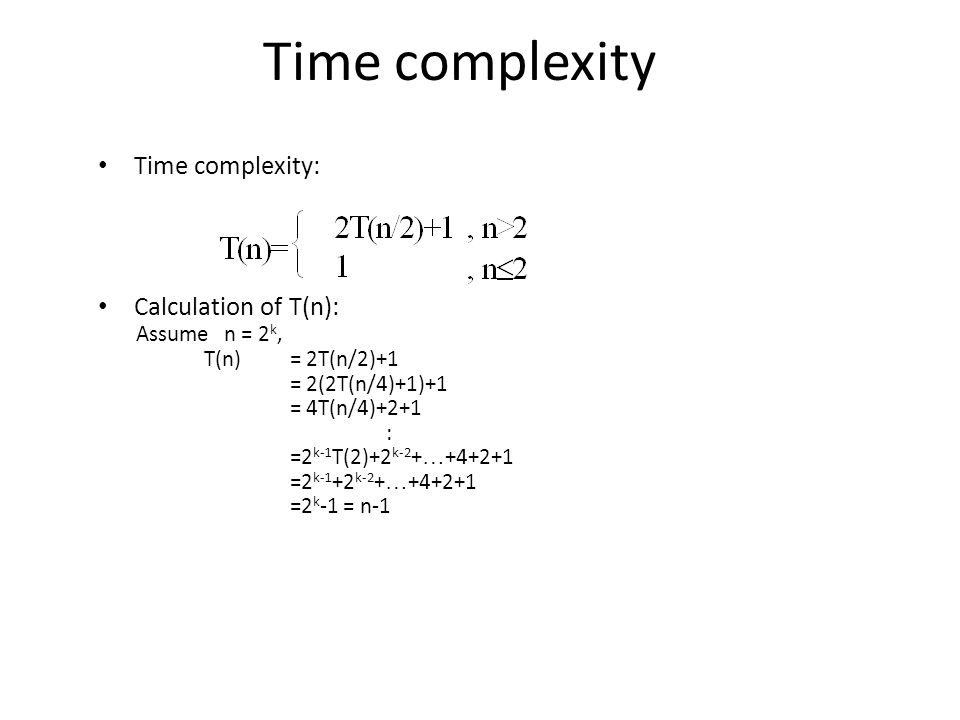

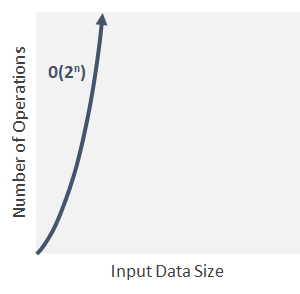

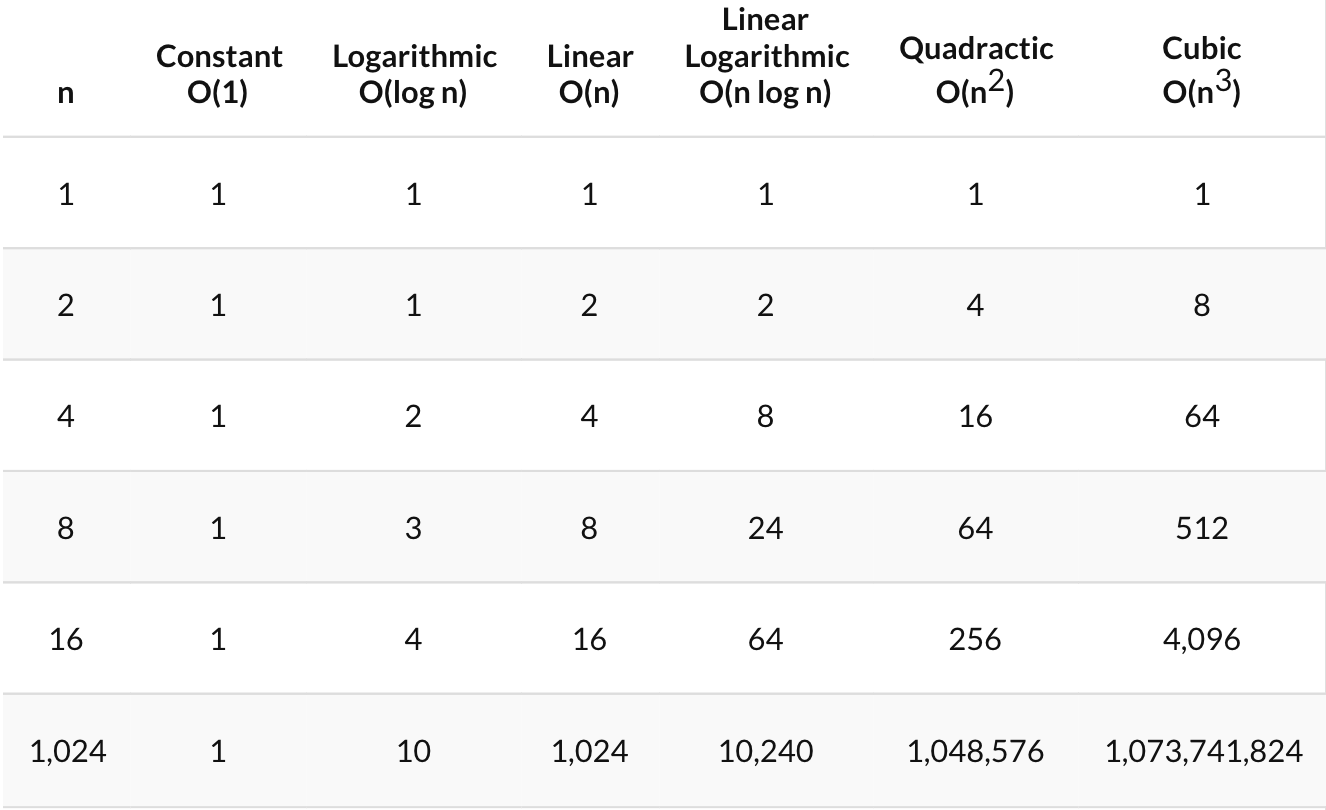

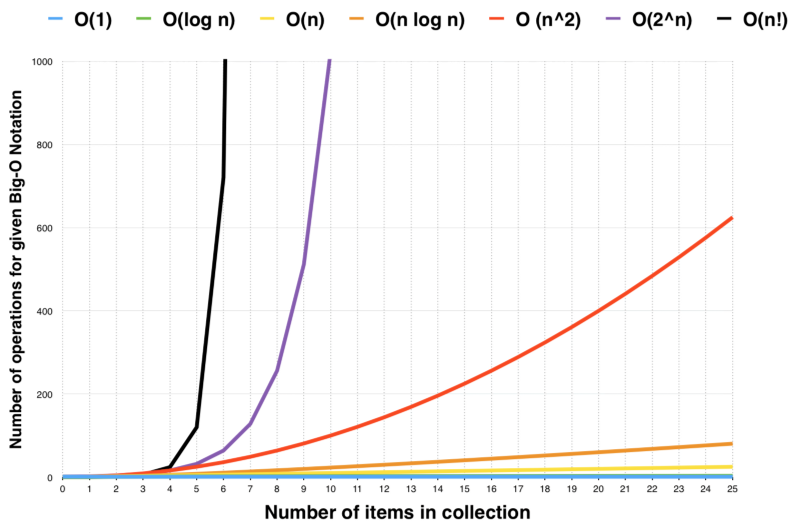

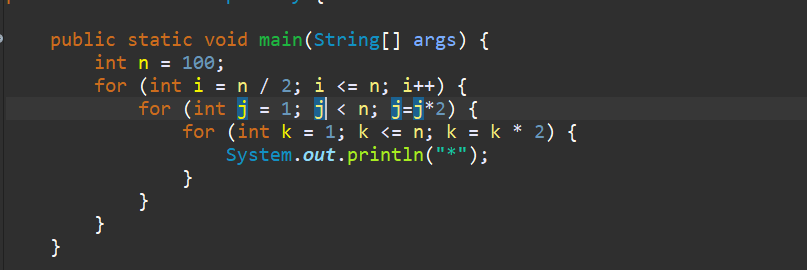

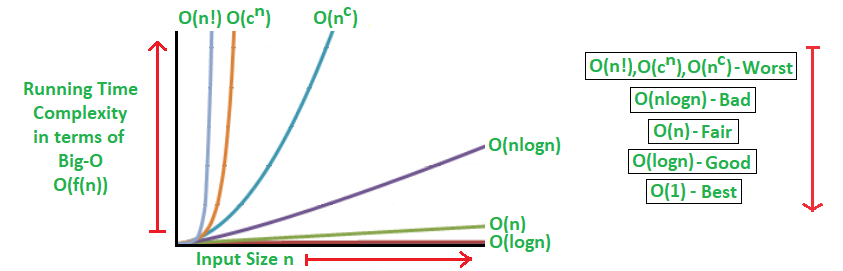

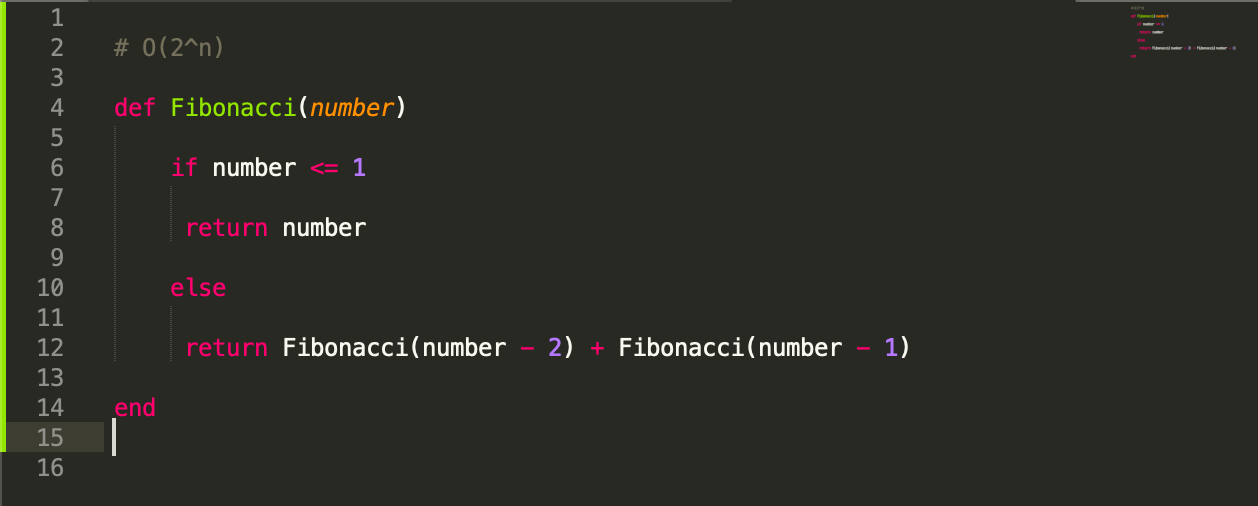

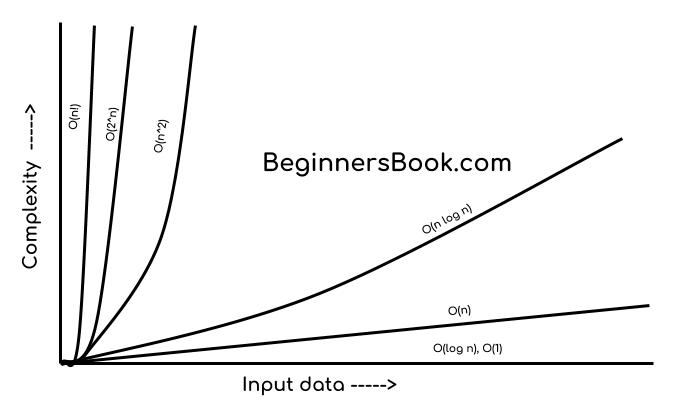

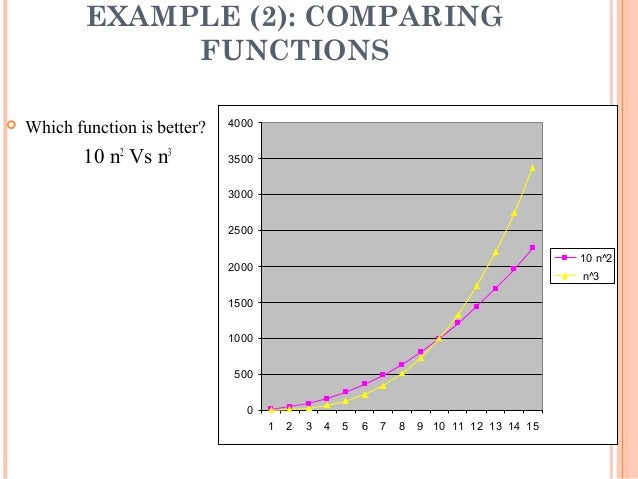

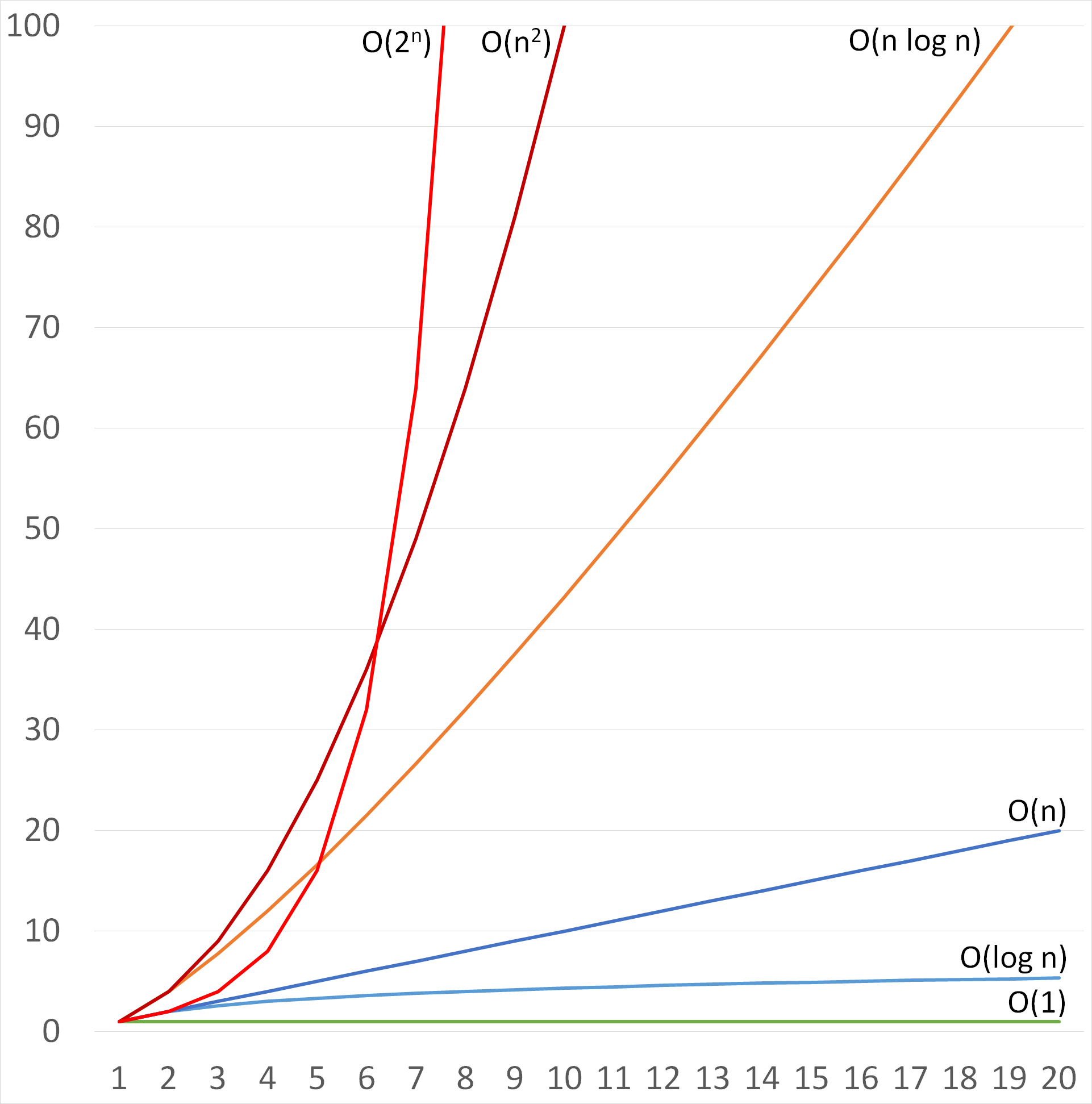

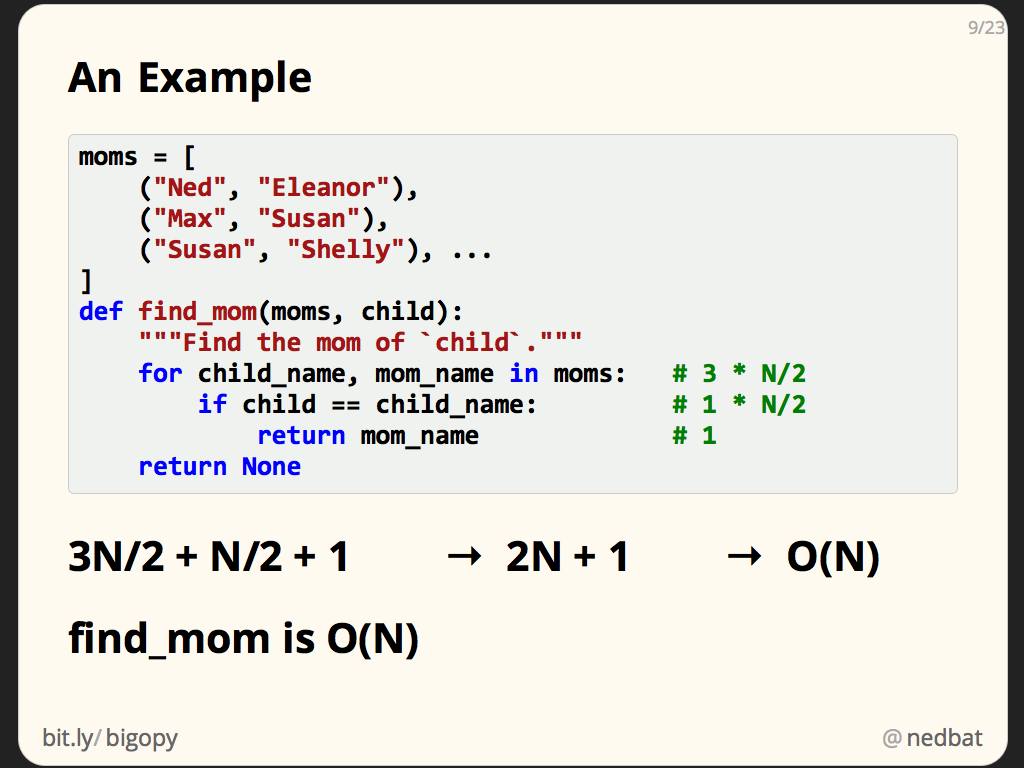

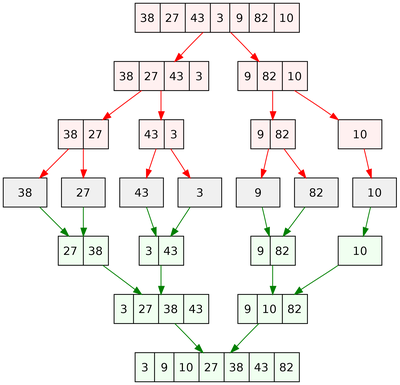

For example, suppose algorithm 1 requires N^2 time, and algorithm 2 requires 10 * N^2 + N time. For both algorithms, the time is O(N^2), but algorithm 1 will always be faster than algorithm 2. In this case, the constants and low-order terms do matter in terms of which algorithm is faster.

… n^(log_2 2) = n^1 = n. Finally, we can see that the recursion runtime from step 2 is O(n) and the non-recursion runtime is also O(n). So we have case 2: O(n^(log_b a) log(n)) = O(n^(log_2 2) log(n)) = O(n^1 log(n)) = O(n log(n)) 👈 this is the running time of merge sort.

O(2^n): exponential time.

Valid, yes. You can express any growth/complexity function inside Big-Oh notation. As others have said, the reason you do not encounter it very often is that it looks sloppy, since it is trivial to additionally show that n/2 ∈ O(n).

Big O Notation Understanding Time Complexity Using Flowcharts Dev Community

2^n time complexity example

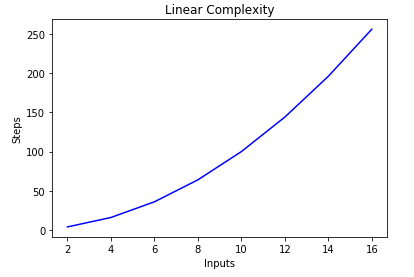

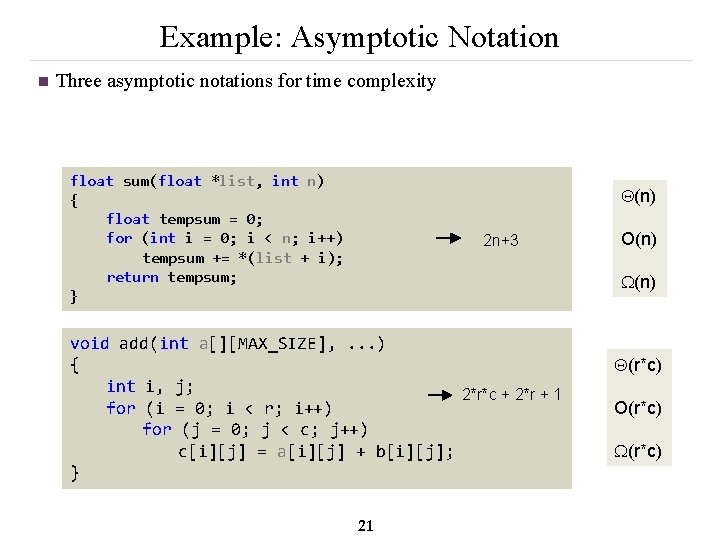

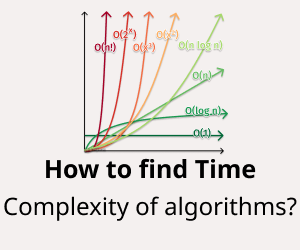

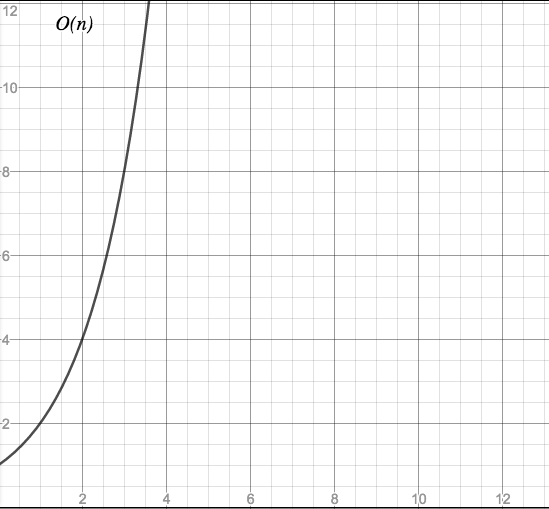

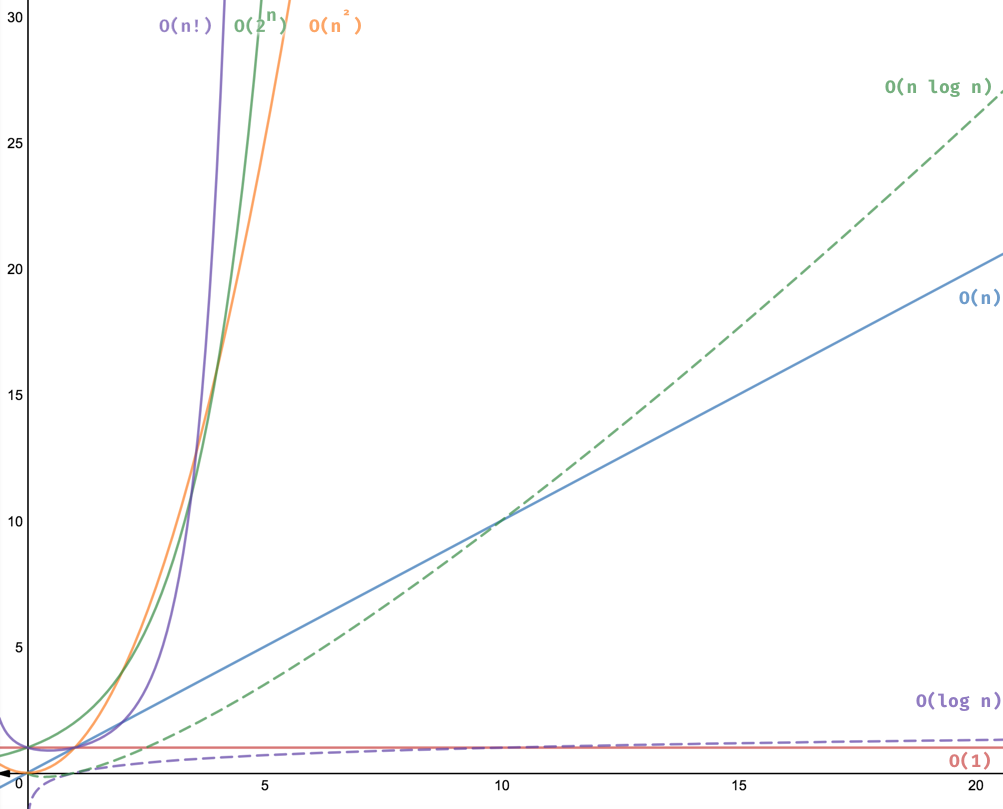

Some time-complexity classes. We list the most commonly used time-complexity classes and a few algorithms that lie in each. For a more com…

O(log_2 n). Examples: binary search in an array of size n; …

Quasilinear time, O(n log n): when each operation on the input data has logarithmic time complexity.

Quadratic time, O(n^2): when a linear-time operation has to be performed for each value in the input data.

Exponential time, O(2^n): when the growth doubles with each addition to the input data set.

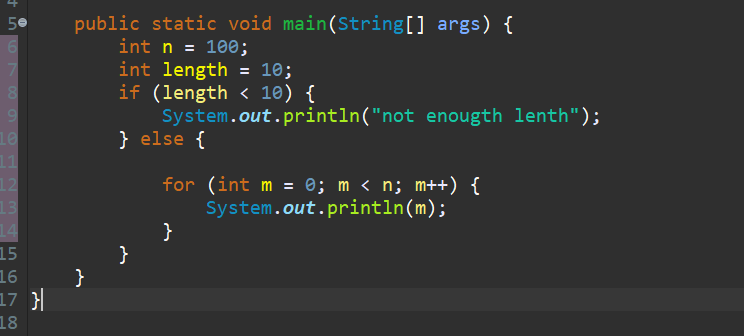

Algorithm Time Complexity Of Iterative Program Example 11 Youtube

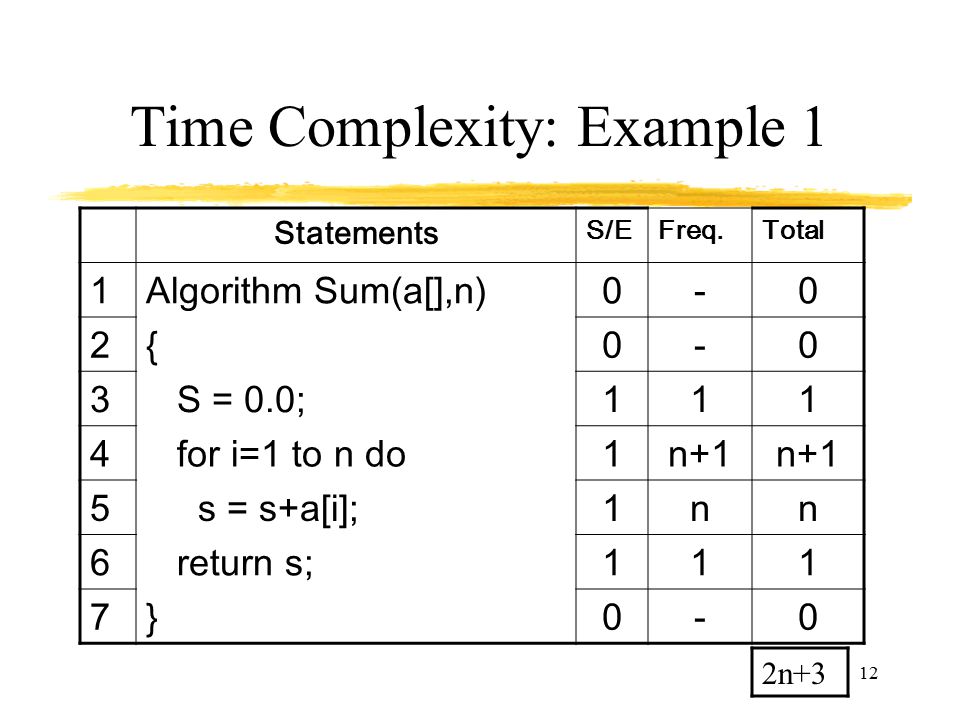

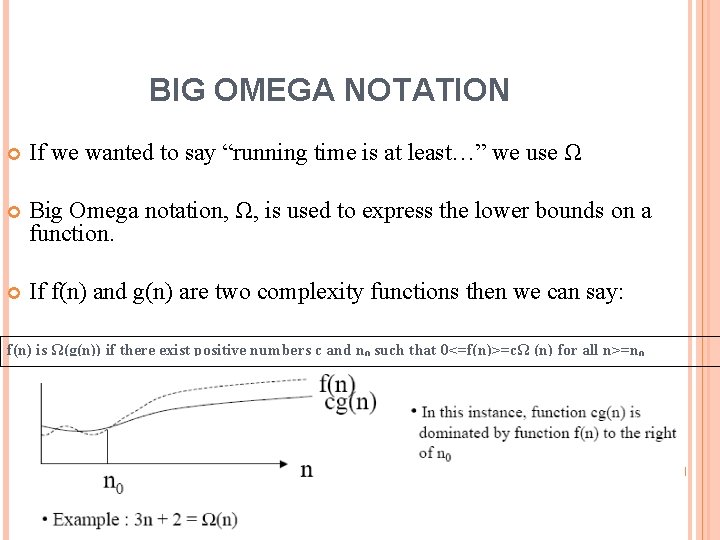

The average-case time complexity is then defined as P_1(n)T_1(n) + P_2(n)T_2(n) …

What is peek in stack?

n is the number of elements that the function receives as input. So this example says that for n inputs, its complexity is equal to n^2.

Comparison of the Common Complexities

Other examples of algorithms with logarithmic time complexity are: finding the binary equivalent of a decimal number, log_2(n); and finding the sum of the digits of a number, log_10(n). Note that in these algorithms the time complexity is based not on the "number of elements" but on the "size of the input".

Other Common Time Complexities
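The two logarithmic examples named above can be sketched as follows. This is a minimal illustration, not taken from the source; the function names `to_binary` and `digit_sum` are hypothetical. Each loop runs once per digit of `n`, so the number of iterations grows with log_2(n) and log_10(n) respectively.

```python
def to_binary(n: int) -> str:
    """Return the binary digits of a non-negative integer.

    The loop halves n each time, so it runs about log_2(n) times.
    """
    if n == 0:
        return "0"
    digits = []
    while n > 0:
        digits.append(str(n % 2))  # lowest binary digit
        n //= 2                    # drop that digit
    return "".join(reversed(digits))


def digit_sum(n: int) -> int:
    """Return the sum of the decimal digits of a non-negative integer.

    The loop divides n by 10 each time, so it runs about log_10(n) times.
    """
    total = 0
    while n > 0:
        total += n % 10  # lowest decimal digit
        n //= 10         # drop that digit
    return total
```

For instance, `to_binary(10)` walks through four binary digits, while `digit_sum(1234)` walks through four decimal digits.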

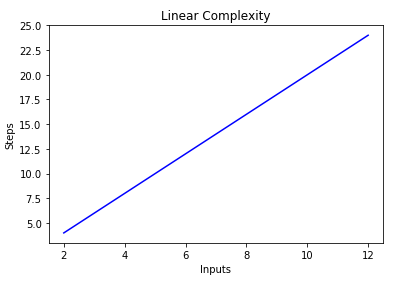

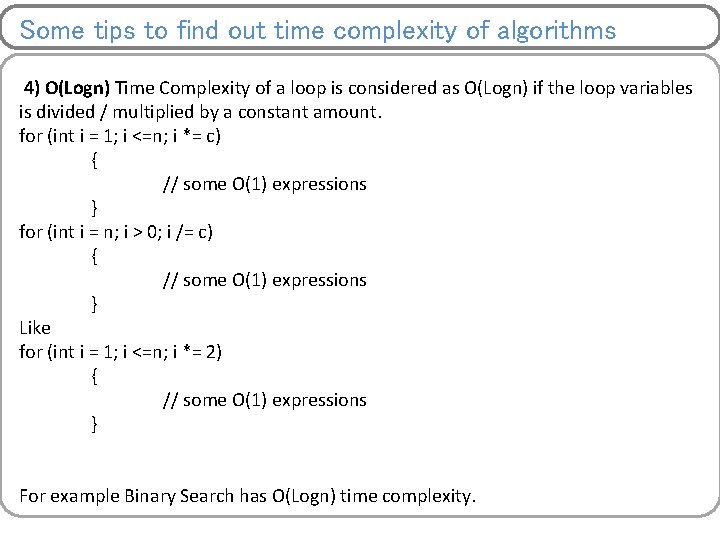

Thoughts on Complexity

• Algorithm can affect time complexity

• Computational model can affect complexity

• Non-determinism can affect complexity

• Encoding of data (base 1 vs base 2) can affect complexity

• For expressivity, all reasonable models are equivalent

• For complexity, many things can change the complexity class

Exponential running time starts out small and then grows steeply as the input grows. Let's discuss it with an example.

Example 5: The recursive computation of Fibonacci numbers is an example of an O(2^n) function. An O(2^n) function doubles in size with each addition to the input data set.

We learned O(n), or linear time complexity, in Big O Linear Time Complexity. We're going to skip O(log n), logarithmic complexity, for the time being. It will be easier to understand after learning O(n^2), quadratic time complexity. Before getting into O(n^2), let's begin with a review of O(1) and O(n), constant and linear time complexities.
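A minimal sketch of the recursive Fibonacci computation mentioned above, with a call counter added so the growth can be observed. The names `fib` and `count_calls` and the list-based counter are illustrative assumptions, not the source's code. Note that 2^n is an upper bound here: the actual number of calls grows by a factor of roughly 1.6 per step.

```python
def fib(n: int, counter: list) -> int:
    """Naive recursive Fibonacci; counter[0] tracks the number of calls."""
    counter[0] += 1
    if n < 2:
        return n
    # Two recursive calls per invocation give the exponential call tree.
    return fib(n - 1, counter) + fib(n - 2, counter)


def count_calls(n: int) -> int:
    """Return how many times fib is invoked when computing fib(n)."""
    counter = [0]
    fib(n, counter)
    return counter[0]


if __name__ == "__main__":
    for n in (5, 10, 15, 20):
        print(n, count_calls(n), 2 ** n)
```

Printing the call count next to 2^n shows both columns growing exponentially, with the call count staying below 2^n.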

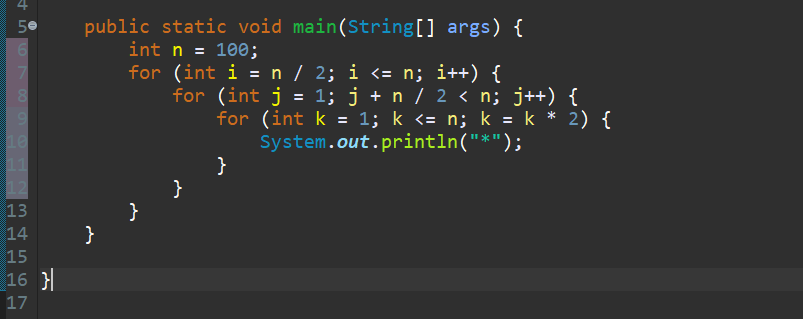

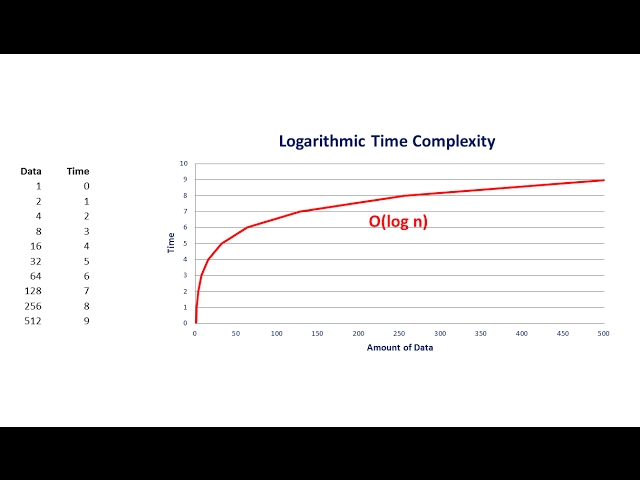

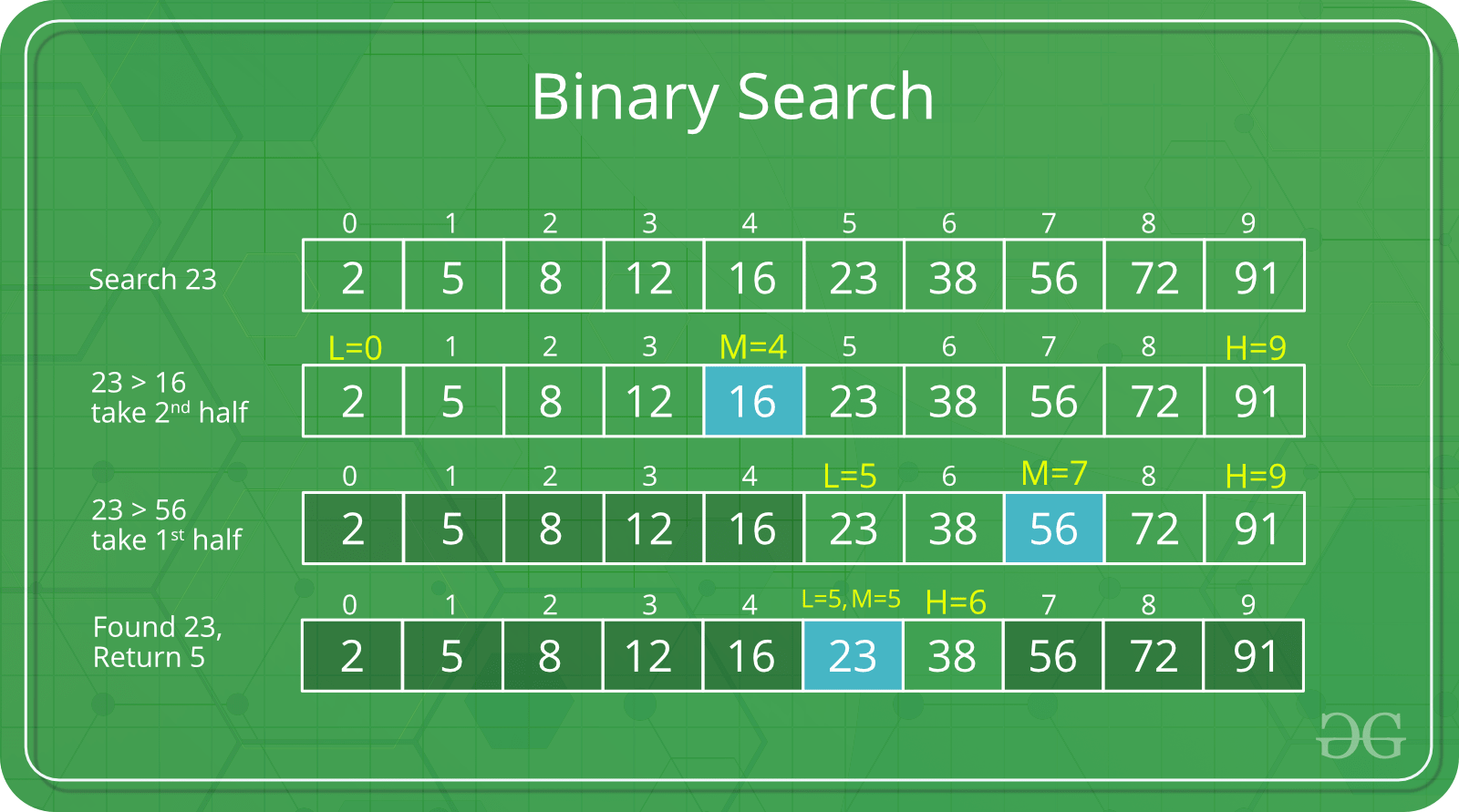

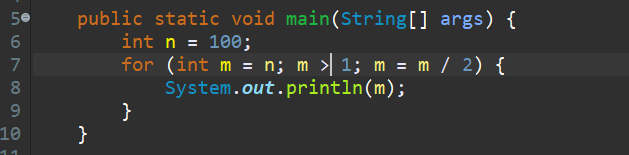

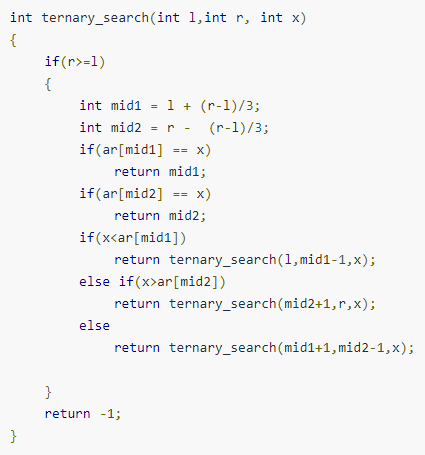

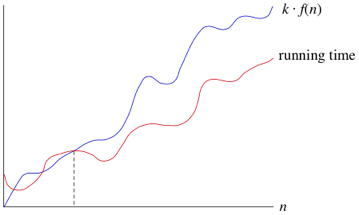

The running time of the algorithm is proportional to the number of times N can be divided by 2 (N is high - low here). This is because the algorithm divides the working area in half with each iteration.

void quicksort(int list[], int left, int right) { int pivot = partition(list, left, right); …

The time complexity of this naive recursive solution is exponential (2^n). In the following recursion tree, K() refers to knapSack(). The two parameters indicated in the following recursion tree are n …

Thus, the time complexity of this recursive function is the product O(n). This function's return value is zero, plus some indigestion.

Worst case time complexity

So far, we've talked about the time complexity of a few nested loops and some code examples. Most algorithms, however, are built from many combinations of these.
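To make the exponential claim about the naive recursive knapsack concrete, here is a hedged sketch of one common formulation (0/1 knapsack, each item either taken or skipped). The signature, the parameter names and the `calls` counter are assumptions for illustration; the source's own `knapSack()` is not shown in full.

```python
def knapsack(weights, values, capacity, n, calls):
    """Best total value using the first n items within capacity.

    Naive recursion: every item branches into "skip" and "take",
    so the call tree has at most 2^(n+1) - 1 nodes.
    calls[0] counts the invocations.
    """
    calls[0] += 1
    if n == 0 or capacity == 0:
        return 0
    if weights[n - 1] > capacity:
        # Item n-1 cannot fit, so it can only be skipped.
        return knapsack(weights, values, capacity, n - 1, calls)
    skip = knapsack(weights, values, capacity, n - 1, calls)
    take = values[n - 1] + knapsack(
        weights, values, capacity - weights[n - 1], n - 1, calls
    )
    return max(skip, take)


if __name__ == "__main__":
    calls = [0]
    best = knapsack([10, 20, 30], [60, 100, 120], 50, 3, calls)
    print(best, calls[0])
```

Because the same (n, capacity) pairs are recomputed many times, caching the results is the usual way to avoid the exponential blow-up.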

Example Of O 2 N Complexity Beyond Corner

Big O Notation And Algorithm Analysis With Python Examples Stack Abuse

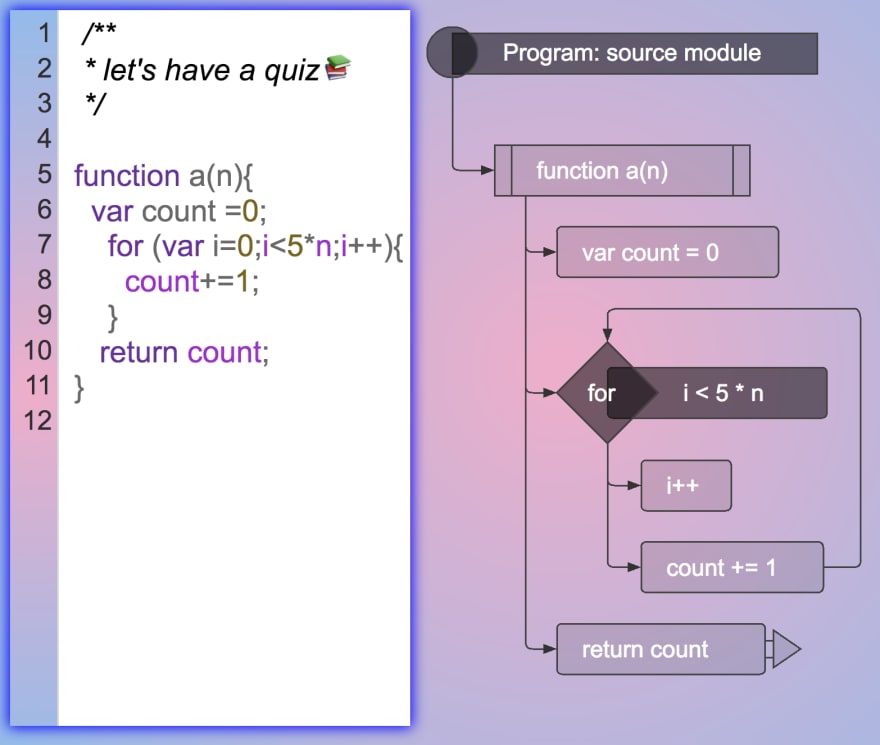

Before getting into O(n), let's begin with a quick refresher on O(1), constant time complexity.

O(1): Constant Time Complexity. Constant time complexity, or O(1), is just that: constant. Regardless of the size of the input, the algorithm will always perform the same number of operations to return an output. Here's an example we used in the …

O(n^2) polynomial complexity has the special name of "quadratic complexity". Likewise, O(n^3) is called "cubic complexity". For instance, brute-force approaches to max/min subarray sum problems generally have O(n^2) quadratic time complexity. You can see an example of this in my Kadane's Algorithm article.

Exponential Complexity: O(2^n)

So there must be some type of behavior that the algorithm shows for it to be given a complexity of log n. Let us see how it works. Since binary search has a best-case efficiency of O(1) and a worst-case (and average-case) efficiency of O(log n), we will look at an example of the worst case. Consider a sorted array of 16 elements.
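The 16-element case can be traced with a short sketch. This is an illustrative iterative binary search that also returns how many loop iterations it used; the function name and the step counter are assumptions added for the demonstration.

```python
def binary_search(items, target):
    """Search a sorted list; return (index or -1, iterations used)."""
    low, high = 0, len(items) - 1
    steps = 0
    while low <= high:
        steps += 1
        mid = (low + high) // 2
        if items[mid] == target:
            return mid, steps
        if items[mid] < target:
            low = mid + 1   # discard the lower half
        else:
            high = mid - 1  # discard the upper half
    return -1, steps


if __name__ == "__main__":
    items = list(range(16))  # a sorted array of 16 elements
    worst = max(binary_search(items, t)[1] for t in items)
    print("worst-case iterations for 16 elements:", worst)
```

In this version the best case (the target sits at the first midpoint) takes one iteration, and no search over 16 elements takes more than log_2(16) + 1 = 5 iterations.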

Big O Notation And Algorithm Analysis With Python Examples Stack Abuse

Time Complexity Complex Systems And Ai

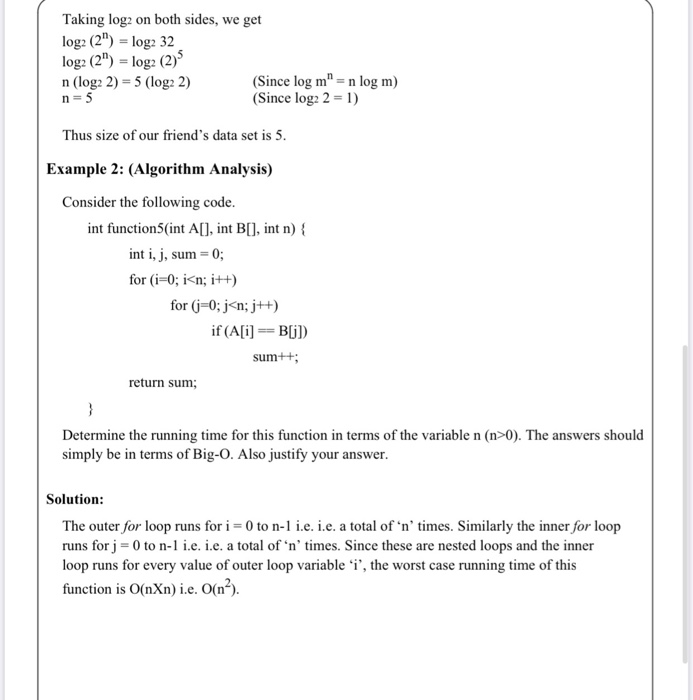

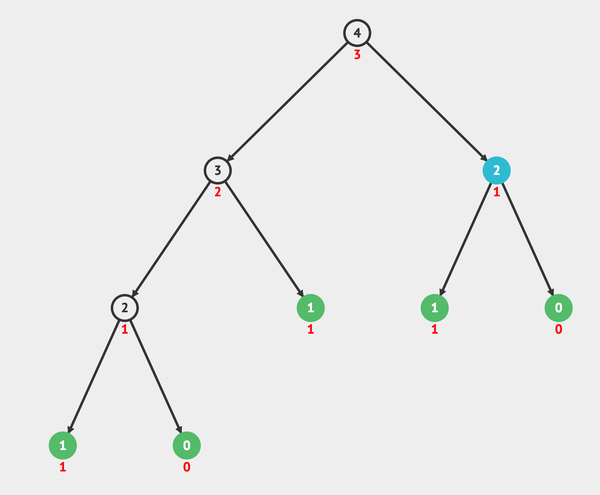

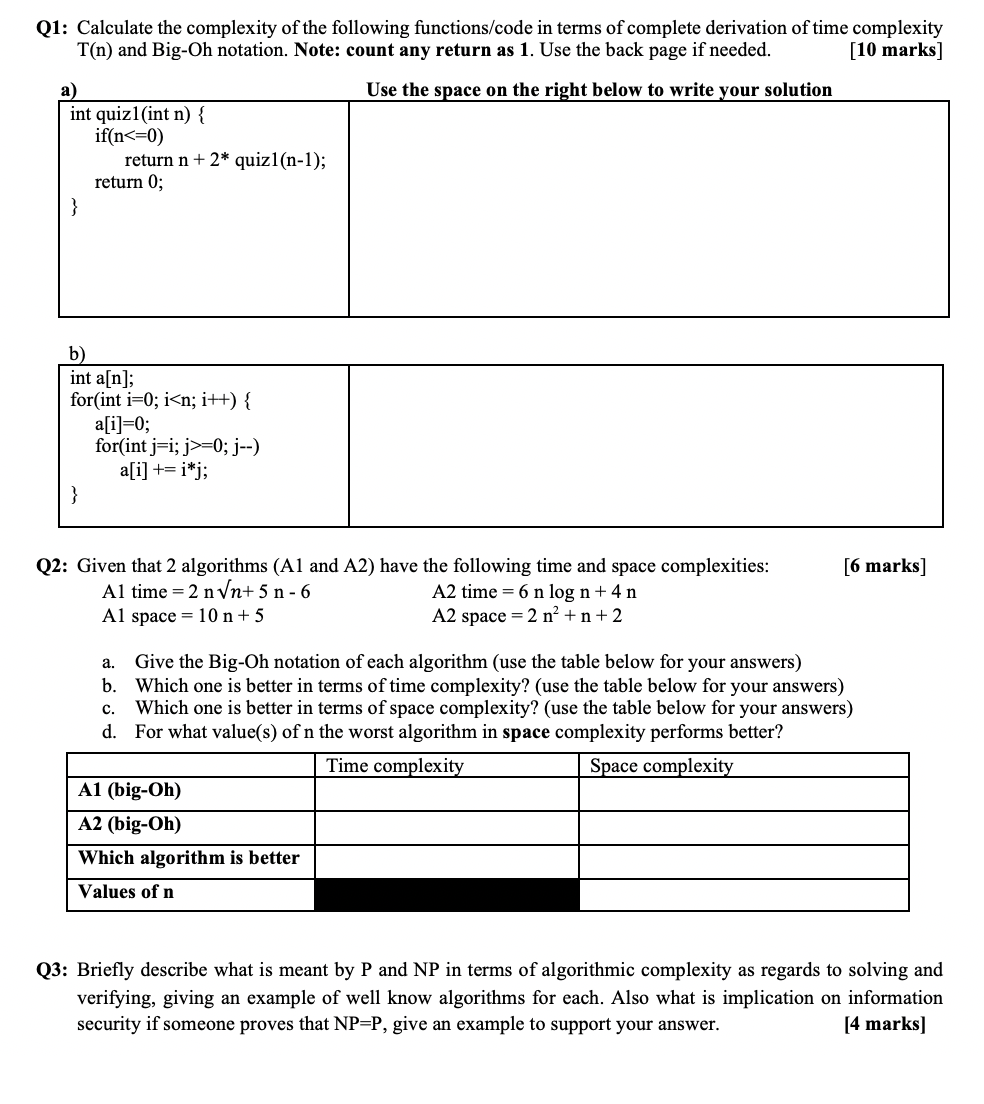

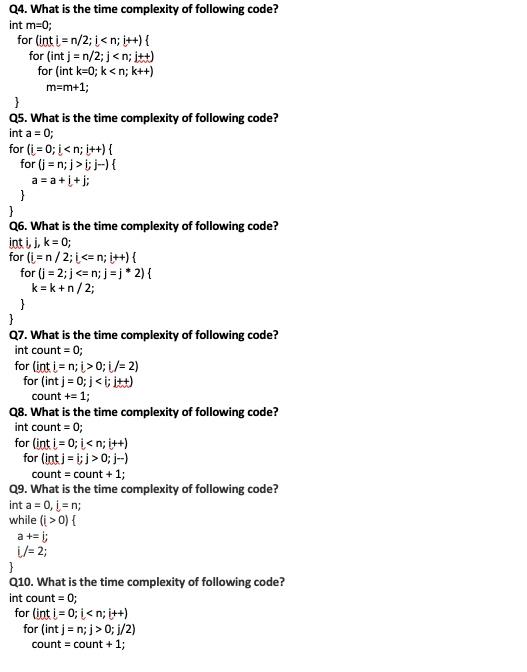

The recurrence relation for the above is T(n) = T(n − 1) + T(n − 2). The run-time complexity for the same is O(2^n), as can be seen in the picture below for n = 8. However, if you look at the bottom of the tree, say by taking n = 3, it won't run 2^n times at each level.

Q1: That is a very good question indeed. Both of the n! …

The outer loop executes N times and the inner loop executes M times, so the time complexity is O(N*M).

2. for (i = 0; …
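The O(N*M) claim for two nested loops can be checked by simply counting iterations. A minimal sketch, with the hypothetical name `count_inner_steps`:

```python
def count_inner_steps(n: int, m: int) -> int:
    """Count how often the body of an N-by-M nested loop executes."""
    steps = 0
    for _ in range(n):        # outer loop: N iterations
        for _ in range(m):    # inner loop: M iterations per outer pass
            steps += 1        # stands in for an O(1) statement
    return steps
```

With M equal to N, the count becomes N * N, which is the quadratic case.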

8 Time Complexities That Every Programmer Should Know Adrian Mejia Blog

Efficiency Springerlink

… and 2^n functions are pretty huge in terms of their orders of growth, and that is what makes them different from each other. Not quite getting what I'm saying?

There is often a time-space tradeoff involved: a case where an algorithm increases space usage with decreased time, or vice versa.

Examples

Problem 3: Time Complexity

| Complexity | Example |
| --- | --- |
| log_2 n | Binary search |
| n | Sequential search |
| n log_2 n | Merge sort |
| n^2 | Selection sort |
| 2^n | Factor an integer |
| n! | Traveling salesman problem |

Robb T. Koether (Hampden-Sydney College), Time Complexity

A Simple Example Finding The Maximum Of A Set S Of N Numbers Ppt Video Online Download

Algorithm Time Complexity Of Iterative Program Example 11 Youtube

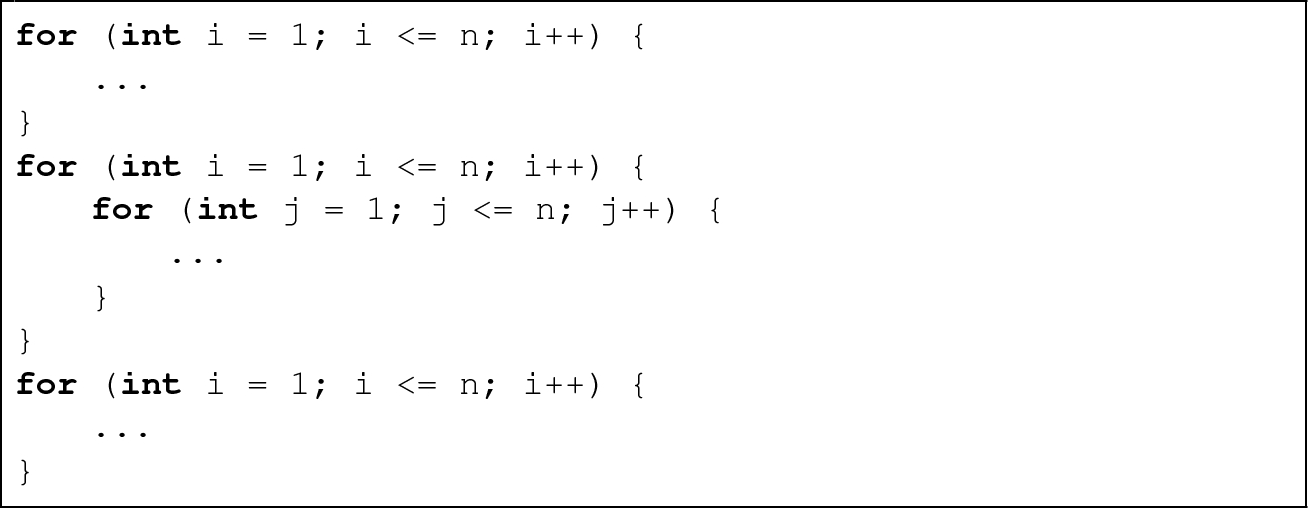

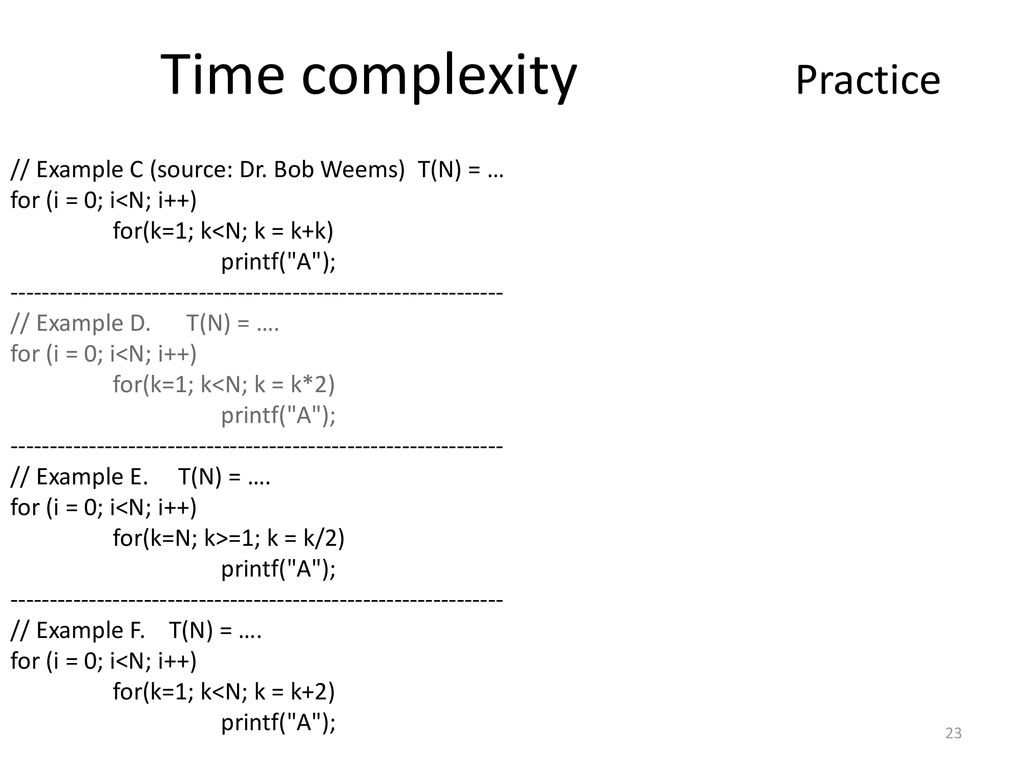

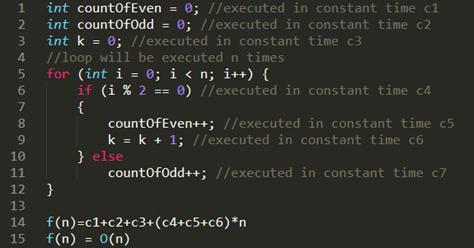

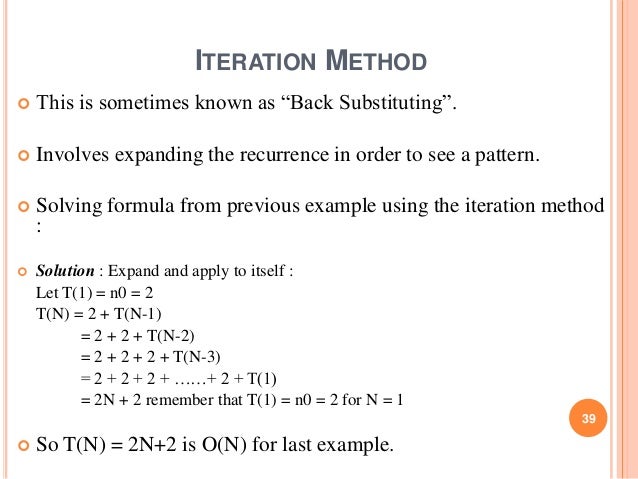

… j++) { sequence of statements of O(1) }} Now the time complexity is O(N^2).

This video contains examples of time complexity log N, N log N, 2^n.

T(N) = 3*O(1) + 4*T(N-2)

T(N) = 7*O(1) + 8*T(N-3)

…

T(N) = (2^(N-1) - 1)*O(1) + (2^(N-1))*T(1)

T(N) = (2^N - 1)*O(1)

T(N) = O(2^N)

To actually figure this out, you just have to know that certain patterns in the recurrence relation lead to exponential results. Generally, T(N) = C*T(N-1) with C > 1 means O(x^N). See …
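The expansion above can be checked numerically. The sketch below assumes the recurrence being expanded is T(N) = 1 + 2*T(N-1) with T(1) = 1, treating each O(1) as exactly one unit of work; that starting recurrence is inferred from the coefficients 3, 4, 7, 8 and is not stated explicitly in the text. The name `t` is illustrative.

```python
def t(n: int) -> int:
    """Unit-cost version of the recurrence T(N) = 1 + 2*T(N-1), T(1) = 1."""
    if n == 1:
        return 1
    return 1 + 2 * t(n - 1)


if __name__ == "__main__":
    for n in range(1, 11):
        # The closed form from the expansion is 2^N - 1.
        print(n, t(n), 2 ** n - 1)
```

Under these assumptions the two printed columns agree for every N, matching the closed form (2^N - 1)*O(1).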

Data Structures Performance Analysis Ppt Video Online Download

Big O Notation Wikipedia

Take a look at the table down below.

quicksort(list, left, pivot - 1);

The running time of the algorithm is proportional to the number of times N can be divided by 2 (N is high - low here), which is log n in base 2.

Worst, best and average

For most algorithms we can calculate a complexity in the worst case (the greatest number of elementary operations), in the best case, and on average.

Performance Analysis Of Algorithms What Is Programming Programming

Essential Programming Time Complexity By Diego Lopez Yse Towards Data Science

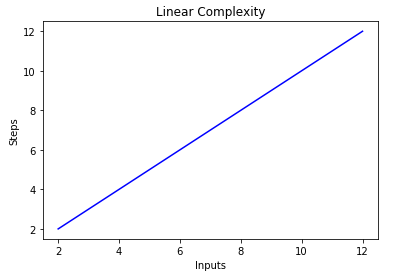

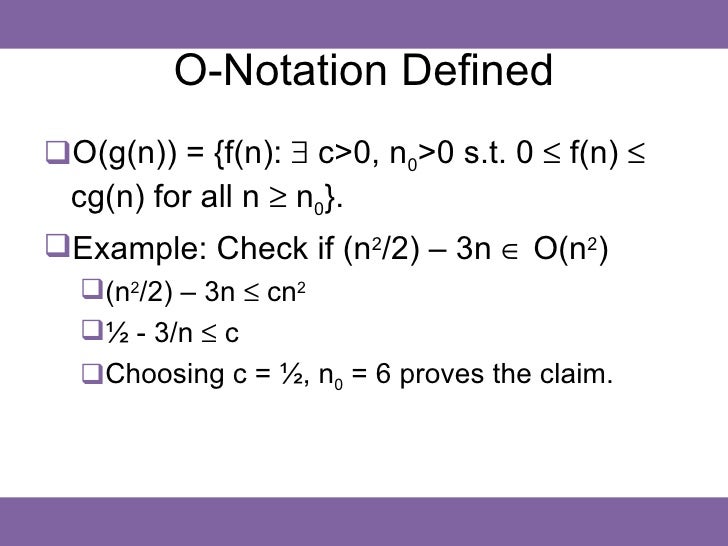

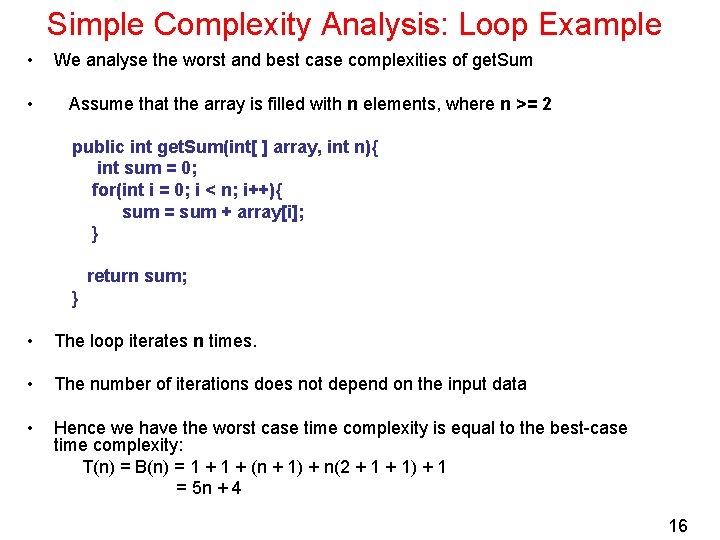

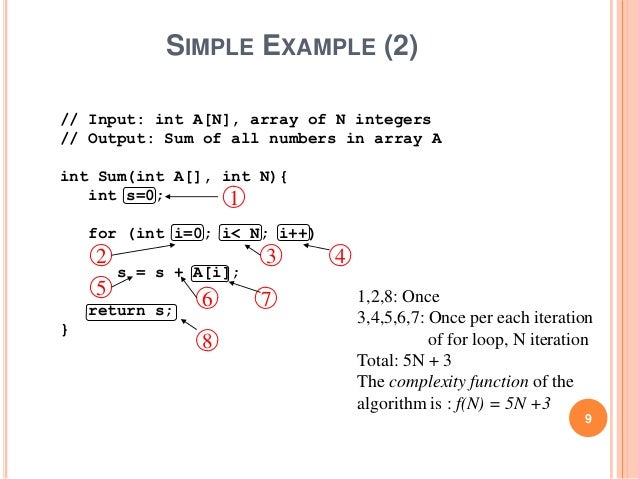

g(n) = n^2

| n | f(n) | g(n) | ⌈f(n)/g(n)⌉ |
| --- | --- | --- | --- |
| 1 | 4 | 1 | 4 |
| 10 | 121 | 100 | 2 |
| 100 | … | … | 2 |

This table suggests trying k = 1 and C = 4, or k = 10 and C = 2. Again, proving either one is good enough to prove big-Oh. (CS 2233 Discrete Mathematical Structures, Order Notation and Time Complexity)

Example 2, Slide 2: Try k = 1 and C = 4 …

Tsum = 1 + 2 * (n + 1) + 2 * n + 1 = 4n + 4 = C1 * n + C2 = O(n)

3. Sum of all elements of a matrix. For this one the complexity is a polynomial equation (a quadratic equation for a square matrix). For an n x n matrix, Tsum = a*n^2 + b*n + c. This Tsum is of order n^2, so it is O(n^2).

The fast version of exponentiation b^n for int n ≥ 0 …

O(n): linear time. Algorithms whose running time is linear in n …
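The matrix-sum case can be illustrated with a short sketch that counts additions. The function name `matrix_sum` and the explicit `additions` counter are assumptions for illustration; the constants a, b and c in Tsum depend on how individual operations are counted, so only the n^2 term is demonstrated here.

```python
def matrix_sum(matrix):
    """Sum all elements of a matrix; also return the number of additions."""
    total = 0
    additions = 0
    for row in matrix:         # n rows
        for value in row:      # n values per row for a square matrix
            total += value
            additions += 1
    return total, additions
```

For an n x n input the addition count is exactly n * n, which is the dominant term that makes the sum O(n^2).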


Time And Space Complexity Performance Analysis Academyera

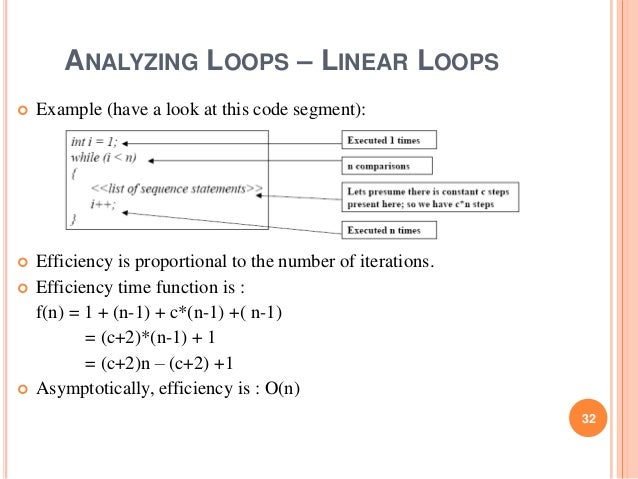

Definition. When we compute the time complexity T(n) of an algorithm we rarely get an exact result, just an estimate. That's fine; in computer science we are typically only interested in how fast T(n) is growing as a function of the input size n. For example, if an algorithm increments each number in a list of length n, we might say: "This algorithm runs in O(n) time and performs O(1) …

See the complete series on recursion here: http://www.youtube.com/playlist?list=PL2_aWCzGMAwLz3g66WrxFGSXvSsvyfzCO

We will analyze the time complexity of recursive …

In computer science, peek is an operation on certain abstract data types, specifically sequential collections such as stacks and queues, which returns the value of the top ("front") of the collection without removing the element from …
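As an illustration of the peek operation described above, here is a minimal stack sketch backed by a Python list. The `Stack` class and its method names are hypothetical; the point is only that `peek` reads the top element in O(1) time without removing it, whereas `pop` removes it.

```python
class Stack:
    """A minimal list-backed stack (illustrative only)."""

    def __init__(self):
        self._items = []

    def push(self, item):
        self._items.append(item)   # O(1) amortized

    def pop(self):
        return self._items.pop()   # removes and returns the top element

    def peek(self):
        return self._items[-1]     # returns the top element, leaves it in place

    def __len__(self):
        return len(self._items)
```

Calling `peek` twice in a row returns the same value and leaves the length of the stack unchanged.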

Time And Space Complexity Aspirants

Analysis Of Algorithms Set 3 Asymptotic Notations Geeksforgeeks

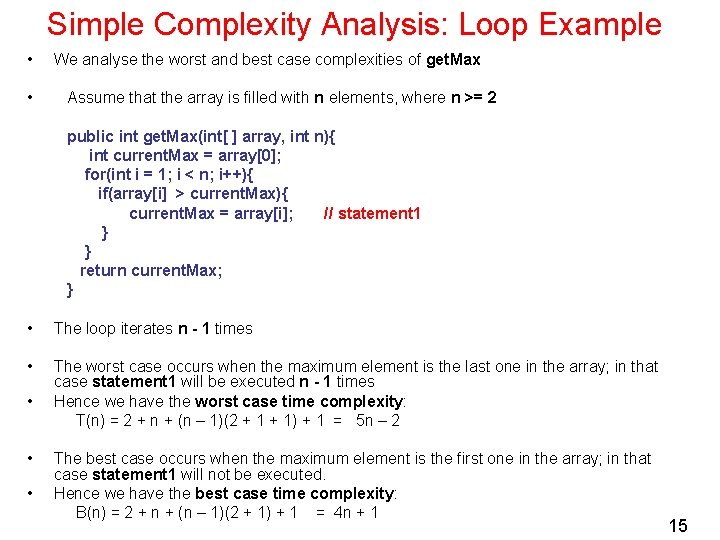

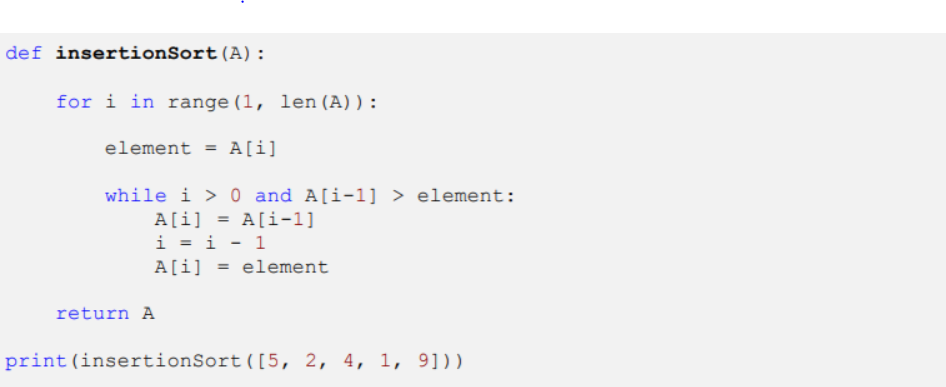

Time and Space Complexity. In this article, I am going to discuss Time and Space Complexity with examples. Please read our previous article, where we discussed Abstract Data Type (ADT) in detail. Time and space complexity is a very important topic, and students sometimes find it difficult to understand even though it is not that difficult.

quicksort(list, pivot + 1, right);

n(n1)/2. Output: 3. n(n1)/2. Explanation: the first for loop will run n times and the other for loop will run (n1) times, so the overall time will be n(n1)/2.

10. Algorithm A and B have worst-case running times of O(n) and O(log n), respectively. Therefore, algorithm B always runs faster than algorithm A. True; …

Big O Notation Understanding Time Complexity Using Flowcharts Dev Community

Time Complexity For Loops Ppt Download

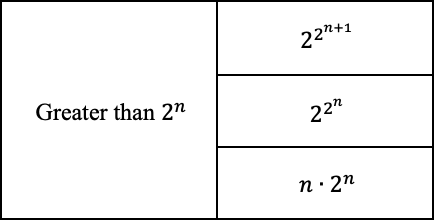

Compare 2^n and n*2^n: n*2^n > 2^n for any n > 1. You can also see this by applying log to both sides, which gives n log(2) < n log(2) + log(n). So by both types of analysis, substituting a number or using log, n*2^n is greater than 2^n. If you get an expression like O(2^n + n*2^n), it can be replaced by O(n*2^n).

• An instruction is a method call => do not count it as 1 instruction. See the time complexity of the method.

• 3-level nested loops

– Big-Oh briefly: understanding why we only look at the dominant term

• Terminology and notation

– 1 -> N will stand for 1, 2, 3, 4, …, (N-1), N

– log_2 N = lg N

– Use interchangeably

• Runtime and …

This is an example of Quadratic Time Complexity.

O(2^N): Exponential Time. Exponential time complexity denotes an algorithm whose growth doubles with …

How To Calclute Time Complexity Of Algortihm

Analysis Of Algorithms Wikipedia

Assume the (n − k)th task is the 0th task, meaning n = k. Then T(n) = T(0) + n = 1 + n ≃ n, hence time complexity = O(n).

Example 2: What is the time complexity of the knapsack problem?

… (1/2)n^2 + (1/2)n (the explanation is in the exercises). When calculating the complexity we are interested in the term that grows fastest, so we omit not only constants but also other terms ((1/2)n in this case). Thus we get quadratic time complexity. Sometimes the complexity depends on more variables (see example below).

Linear time


Time complexity applies to algorithms, not sets. Presumably, you mean to ask about the time complexity of constructing the powerset. The powerset of a set of size n will contain 2^n subsets, each of size between 0 and n. The average size of an element of the power set is n / 2. This gives O(n * 2^n) for the naive construction time.

… i++) { for (j = 0; …
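A hedged sketch of one naive power set construction, to make the n * 2^n figure tangible. The function name `power_set` and the doubling strategy are illustrative choices, not necessarily the construction the quoted answer had in mind.

```python
def power_set(items):
    """Return all subsets of items as a list of lists.

    Each new item doubles the number of subsets, giving 2^n subsets in total.
    Copying each subset costs time proportional to its size, which is
    where the extra factor of n comes from.
    """
    subsets = [[]]
    for item in items:
        # Every existing subset appears once without and once with the item.
        subsets = subsets + [subset + [item] for subset in subsets]
    return subsets


if __name__ == "__main__":
    result = power_set([1, 2, 3])
    print(len(result), sum(len(s) for s in result))
```

For three items this yields 8 subsets holding 12 elements in total, that is 2^n subsets with an average size of n / 2.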

